Is it worth having a dedicated AI forum? I'm not sure where it would go, but here are a few examples of the issues facing us (you lot) in the future:

blog.coupler.io

www.forbes.com

www.pewresearch.org

The last one is rather long, and I haven't read it all the way through.

What do you think?

11 Artificial Intelligence Issues You Should Worry About | Coupler.io Blog

Be aware of 11 threats, challenges, and issues with artificial intelligence and learn how to deal with them.

12 Risks and Dangers of Artificial Intelligence (AI)

Artificial intelligence has potential, but the dangers of AI have grabbed more attention in recent years. Check out 12 dangers of artificial intelligence.

builtin.com

The 15 Biggest Risks Of Artificial Intelligence

There's a dark side to AI. Learn about the potential negative consequences of this transformative technology and how we can help ensure a safer and more balanced future.

www.forbes.com

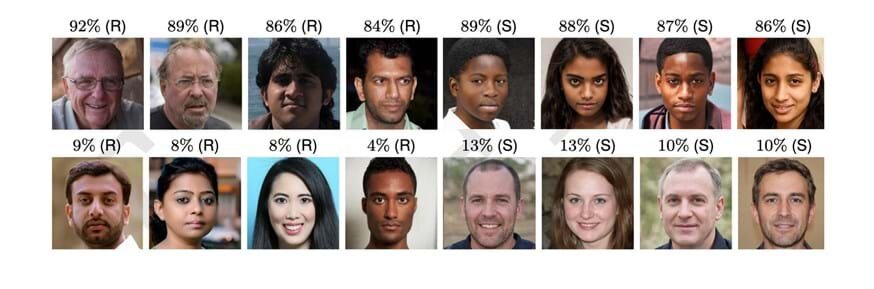

1. Worries about developments in AI

It would be quite difficult – some might say impossible – to design broadly adopted ethical AI systems. A share of the experts responding noted that
